View-based Explanations for Graph Neural Networks

A summary of the SIGMOD

2024 research paper by

Tingyang Chen, Dazhuo Qiu, Yinghui Wu, Arijit Khan, Xiangyu Ke, and Yunjun Gao

Background

[Graph Classification, Graph Neural Networks, and Explainability]. Graph

classification is essential for several real-world tasks such as drug

discovery, text classification, and recommender system [1, 2, 3]. The rising

graph neural networks (GNNs) have exhibited great potential in graph

classification across many real domains, e.g., social networks, chemistry, and

biology [4, 5, 6, 7]. Given a database G as a set of graphs, and a set of class

labels Ł, GNN-based graph classification aims to learn a GNN as a classifier M,

such that each graph 𝐺 ∈ G is assigned a correct label M (𝐺) ∈ Ł.

GNNs are “black-box” — it is

inherently difficult to understand which aspects of the input data drive the

decisions of the network. Explainability can improve the model’s transparency

related to fairness, privacy, and other safety challenges, thus enhancing the

trust in decision-critical applications. Graph data are complex, noisy, and

content-rich. End users and domain scientists (e.g., data scientists, business

analysts, chemists) often find them difficult to query and explore. Hence, it

is even more critical to provide human-understandable explanations over

classification results on such datasets [8, 9, 10].

It remains a desirable yet

nontrivial task to explain the results of high-quality GNN classifiers for

domain experts [10]. Given M and G, one wants to discover a critical fraction

of G that is responsible for the occurrence of specific class labels of

interest, assigned by GNN M over G. In particular, such explanation structures

should (1) offer “finer-grained” insights by capturing how

certain features, nodes, or subgraphs contribute to specific classifications in

G; (2) be “queryable”, hence are easy for human experts to access

and inspect with domain knowledge; and (3) be “configurable” to

enable users with the flexibility to freely select a designated number of nodes

from different classes, to obtain comprehensive and detailed explanations tailored

to their classes of interest.

Challenges

with Existing GNN Explainability Methods. State-of-the-art

GNN explainability approaches [11, 12, 13, 14, 15, 16, 17, 18, 19] are limited

to providing explanations for individual instances or specific class labels.

The main focus of these methods is on defining explanations as crucial input

features, often in the shape of numerical encoding [10]. These methods often

fall short in providing targeted and configurable explanations for multiple

class labels of interest. Additionally, existing methods may return large or an

excessive number of explanation structures, hence are not easily comprehensible

(Figure 1). Moreover, these explanation structures often lack direct

accessibility and cannot be queried easily, posing a challenge for expert users

who seek to inspect the specific reasoning behind a GNN’s decision based on

domain knowledge (Table 1).

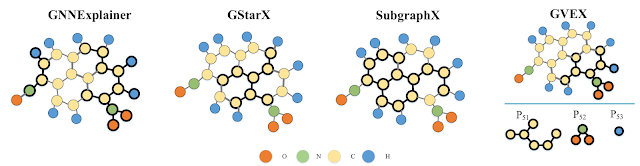

Figure 1. Existing GNN explainability methods such as GNNExplainer and SubgraphX produce larger explanation subgraphs compared to GVEX (ours). Additionally, our explanation view breaks down such subgraphs into smaller components that may appear multiple times, facilitating easier access and exploration.

Table 1. Comparison of our GVEX technique with state-of-the-art GNN explanation methods. Here “Learning” denotes whether (node/edge mask) learning is required, “Task” means what downstream tasks each method can be applied to (GC/NC: graph/ node classification), “Target” indicates the output format of explanations (E/NF: Edge/Node Features), “Model-agnostic”(MA) means if the method treats GNNs as a black-box during the explanation stage (i.e., the internals of the GNN models are not required), “Label-specific"(LS) means if the explanations can be generated for a specific class label; “Size-bound”(SB) means if the size of explanation is bounded; “Coverage” means if the coverage property is involved, “Config” means if users can configure the method to generate explanations for designated class labels; “Queryable” means if the explanations are directly queryable.

Our Motivation. Consider the following real-world example.Example. In

drug discovery, mutagenicity refers to the ability of a chemical compound to

cause mutation. It is an adverse property of a molecule that hampers its

potential to become a marketable drug and has been of great interest in the

field. An emerging application of GNNs is to classify chemical compound as

graphs in terms of mutagenicity to support effective drug discovery [20, 21].

Consider

a real-world molecular dataset represented as graphs in Figure 2. A GNN

classifies four graphs {𝐺1, 𝐺2, 𝐺3, 𝐺4} into two groups with class labels “Mutagens” and

“Nonmutagens”, respectively, based on whether they have mutagenicity property.

A medical analyst seeks to understand “why” in particular the first two

chemical compounds {𝐺1, 𝐺2} are recognized as mutagens, “what” are critical molecular

substructures that may lead to such classification results, and further wants

to search for and compare the difference between these compounds that

contribute to their mutagenicity using domain knowledge. The large number of

chemical graphs and larger explanation sizes make a direct inspection of GNN results

challenging. For example, it is difficult to discern whether the binding of

multiple carbon rings or the presence of hydrogen atoms on the carbon rings

plays a decisive role in GNN-based classification to decide mutagenicity.

Figure 2. GVEX: Our two-tier explanations for GNN-based drug classification. Subgraphs represent "lower-tier" explanation structures. Patterns represent "higher-tier" queryable structures. The three configuration scenarios at the bottom indicate whether the user prefers only one class or is interested in the nature of both classes.

Based on domain knowledge, toxicophores are substructures of chemical compounds that indicate an increased potential for mutagenicity. For example, the aromatic nitro group is a well-known toxicophore for mutagenicity [22]. Such structures can be readily encoded as “graph views” with a two-tier structure, where toxicophores are expressed as graph patterns that summarize common substructures of a set of “explanation” subgraphs, as illustrated in Figure 2. The upper left corner of the figure shows two graph patterns {𝑃11, 𝑃12} and corresponding subgraphs that explain “why” the compounds 𝐺1 and 𝐺2 have mutagenicity. Indeed, we find that (1) if these subgraphs are removed from 𝐺1 and 𝐺2, the remaining part can no longer be recognized by the same GNN as mutagens; and (2) two of the patterns 𝑃11 and 𝑃12 are real toxicophores as verified by domain experts. Similarly, the middle right corner depicts subgraphs with common structures summarized by graph patterns 𝑃21 to 𝑃22, which are responsible for nonmutagens. Surprisingly, 𝑃21 and 𝑃22 are also toxicophores as per domain knowledge. Therefore, such patterns can be suggested to the analysts for further inspection, or be conveniently issued as graph queries for downstream analysis, e.g., “which toxicophores occur in mutagens?” “Which nonmutagens contain the toxicophore pattern 𝑃22?”

In

addition, the analyst wants to understand representative substructures that are

discriminative enough to distinguish mutagens and nonmutagens. This can be

captured by the specific toxicophore 𝑃12 that covers the nodes in all two chemical compounds {𝐺1, 𝐺2} with label

“mutagens”, but does not occur in nonmutagens {𝐺3, 𝐺4}. These graph

patterns, along with their matched subgraphs, provide concise and queryable

structures to humans, enabling a more intuitive understanding of the GNN-based

mutagenicity analysis.

Last

but not the least, users should have the flexibility to configure different scenarios

based on their preference for a single class or interest in the characteristics

of both classes, e.g., they would like to understand representative

substructures that are discriminative enough to distinguish mutagens and

nonmutagens. For the mutagens label, 𝑁𝑂2 is a real toxicophore (i.e., a pattern that indicates an

increased potential for mutagenicity) as verified by domain experts [3]. In

contrast, users find 𝑁𝐻2 as a common pattern

in the nomutagens label. When users attempt to understand both classes, our

method GVEX presents the salient patterns of both classes, shedding light on

important patterns that occur in this molecular dataset (e.g., three

configuration scenarios at the bottom of Figure 2).

Our

Solution [Two-tier Explanation Structure: Explanation Views]. We propose GVEX, a novel framework that generates Graph

Views for GNN EXplanation. We design a two-tier explanation structure called

explanation views. An explanation view comprises a collection of graph patterns

along with a set of induced explanation subgraphs. Given a database G of

multiple graphs and a specific class label l assigned by a GNN-based classifier M, lower-tier subgraphs

provide insights into the reasons behind the assignment of l by M. They serve as both factual (that preserves the

result of classification) and counterfactual explanations (which flips the result

if removed). On the other hand, the higher-tier patterns summarize the

subgraphs using common substructures for efficient search and exploration of

these subgraphs (Figure 2).

Our

Contributions.

(1) We introduce explanation views, a novel class of explanation structure for GNN-based graph classification. An explanation view is a two-tier structure that consists of graph patterns and a set of explanation subgraphs induced from graphs via graph pattern matching, such that (a) the subgraphs are responsible for the occurrence of specific class label 𝑙 of user’s interest, and (b) the patterns summarize the details of explanation subgraphs as common substructures for efficient search and comparison of these subgraphs.

(2) For explanation views, we introduce a set of quality measures in terms of explainability and coverage properties. We formulate the problem to compute the optimal explanation views for GNN-based graph classification. The problem is in general Σ2P-hard, and even remains 𝑁𝑃-hard for a special case when G has no edge.

(3) We present GVEX, an algorithmic solution for generating graph views to explain GNNs. (a) We first introduce an approximation scheme that follows an “explain-and-summarize” strategy. The method first computes a set of node-induced subgraphs with guaranteed explainability, by identifying important nodes with the maximum diversified feature influence. We then summarize these subgraphs into graph patterns that ensures to cover all such nodes, and meanwhile, introduce small edge coverage error. This guarantees an overall 1/2 -approximation for the view generation problem. (b) We further develop a streaming algorithm that avoids generation of all explanation subgraphs. The algorithm processes a batched stream of nodes and incrementally maintains explanation views under the coverage constraint, with an approximation ratio 1/4 relative to the processed fraction of graphs.

(4) Using real-world graphs and representative GNNs, we experimentally verify that our view generation algorithms can effectively retrieve and summarize substructures to explain GNN-based classification. We also showcase that our algorithms can support GNN-based drug design and social analysis well.

For more information, click here for

our paper. Code: GitHub.

Blog post contributed by: Arijit Khan

References:

[1] W. Hu, M. Fey, M. Zitnik, Y. Dong, H.

Ren, B. Liu, M. Catasta, and J. Leskovec. 2020. Open graph benchmark: Datasets

for machine learning on graphs. Advances in neural information processing systems

33 (2020), 22118–22133.

[2] S. Wu, F. Sun, W. Zhang, X. Xie, and B.

Cui. 2022. Graph neural networks in recommender systems: a survey. Comput.

Surveys 55, 5 (2022), 1–37.

[3] L. Yao, C. Mao, and Y. Luo. 2019. Graph

convolutional networks for text classification. In AAAI Conference on

Artificial Intelligence, Vol. 33. 7370–7377.

[4] E. Cho, S. A Myers, and J. Leskovec.

2011. Friendship and mobility: user movement in location-based social networks.

In ACM International Conference on Knowledge Discovery and Data Mining (KDD).

1082–1090.

[5] L. Wei, H. Zhao, Z. He, and Q. Yao. 2023.

Neural architecture search for GNN-based graph classification. ACM Transactions

on Information Systems (2023).

[6] J. You, B. Liu, Z. Ying, V. Pande, and J.

Leskovec. 2018. Graph convolutional policy network for goal-directed molecular

graph generation. Advances in Neural Information Processing Systems (NeurIPS)

31 (2018).

[7] M. Zitnik, M. Agrawal, and J. Leskovec.

2018. Modeling polypharmacy side effects with graph convolutional networks.

Bioinformatics 34, 13 (2018), i457–i466.

[8] M. Du, N. Liu, and X.

Hu, “Techniques for Interpretable Machine Learning”, Commun. ACM, vol. 63, no.

1, 2020.

[9] Z. C. Lipton, “The

Mythos of Model Interpretability”, Commun. ACM, vol. 61, no. 10, 2018.

[10] H. Yuan, H. Yu, S.

Gui, and S. Ji, “Explainability in graph neural networks: A taxonomic survey,”

IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 45, no. 5,

pp. 5782–5799, 2023.

[11] R. Schwarzenberg, M.

Hubner, D. Harbecke, C. Alt, and L. Hennig, “Layerwise relevance visualization

in convolutional text graph classifiers,” in Workshop on Graph-Based Methods

for Natural Language Processing (TextGraphs@EMNLP), 2019, pp. 58–62.

[12] Q. Huang, M. Yamada,

Y. Tian, D. Singh, and Y. Chang, “Graphlime: Local interpretable model

explanations for graph neural networks,” IEEE Transactions on Knowledge and

Data Engineering, 2022.

[13] M. S. Schlichtkrull,

N. De Cao, and I. Titov, “Interpreting graph neural networks for nlp with

differentiable edge masking,” in International Conference on Learning

Representations (ICLR), 2021.

[14] D. Luo, W. Cheng, D.

Xu, W. Yu, B. Zong, H. Chen, and X. Zhang, “Parameterized explainer for graph

neural network,” Advances in Neural Information Processing Systems (NeurIPS),

vol. 33, pp. 19 620–19 631, 2020.

[15] Z. Ying, D. Bourgeois,

J. You, M. Zitnik, and J. Leskovec, “Gnnexplainer: Generating explanations for

graph neural networks,” Advances in Neural Information Processing Systems

(NeurIPS), vol. 32, 2019.

[16] H. Yuan, H. Yu, J.

Wang, K. Li, and S. Ji, “On explainability of graph neural networks via

subgraph explorations,” in International Conference on Machine Learning (ICML),

2021, pp. 12 241–12 252.

[17] H. Yuan, J. Tang, X.

Hu, and S. Ji, “Xgnn: Towards model-level explanations of graph neural

networks,” in ACM International Conference on Knowledge Discovery and Data

Mining (KDD), 2020, pp. 430–438.

[18] Z. Huang, M. Kosan, S.

Medya, S. Ranu, and A. Singh, “Global counterfactual explainer for graph neural

networks,” in ACM International Conference on Web Search and Data Mining

(WSDM), 2023, pp. 141–149.

[19] S. Zhang, Y. Liu, N.

Shah, and Y. Sun, “Gstarx: Explaining graph neural networks with

structure-aware cooperative games,” in Advances in Neural Information

Processing Systems (NeurIPS), 2022.

[20] M. Jiang, Z. Li, S.

Zhang, S. Wang, X. Wang, Q. Yuan, and Z. Wei. 2020. Drug–target affinity

prediction using graph neural network and contact maps. RSC advances 10, 35

(2020), 20701–20712.

[21] J. Xiong, Z. Xiong, K.

Chen, H. Jiang, and M. Zheng. 2021. Graph neural networks for automated de novo

drug design. Drug Discovery Today 26, 6 (2021), 1382–1393.

[22] J. Kazius, R. McGuire,

and R. Bursi. 2005. Derivation and validation of toxicophores for mutagenicity prediction.

Journal of Medicinal Chemistry 48, 1 (2005), 312–320.

Comments

Post a Comment