Online Updates of Knowledge Graph Embedding

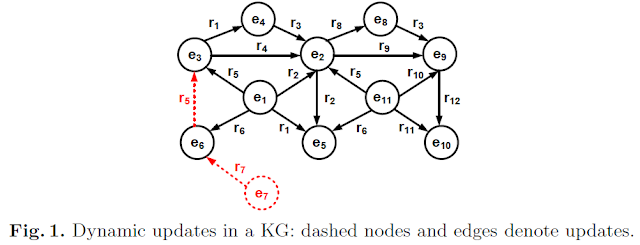

[Challenges] Consider the KG in Figure 1, the updates are denoted by dashed nodes and edges. Assume that we have embedded this KG using TransE [23], which should hold the translation relation: h + r ≈ t (vectors are represented as bold characters) for each triple (h, r, t), where h is a head entity, r is a relation, and t is a tail entity. After adding a new triple (e6, r5, e3), where e6, e3 are existing entities and r5 is an existing relation in the earlier version of the KG, we now need to satisfy e6 + r5 ≈ e5. No matter for which element in (e6, r5, e3) we decide to update its vector, it will break the translation relations h + r≈t for other triples containing our selected element, thereby creating a cascade of updates on the embedding of the entire KG. In summary, when a KG has local updates with addition and deletion of triples, if we revise the vectors of some entities and relations due to such updates, these revisions may cascade in the entire KG via connections among entities and relations, which is expensive.

We next propose an online learning algorithm to incrementally update the KG embedding.

(1) Following the inductive learning, we keep all learnt parameters in R-GCNs and the gate strategy unaffected.

(2) Contextual element embeddings of existing entities and relations also remain the same.

(3) After a KG update, for many entities and relations, their contexts remain unchanged, so their contextual subgraph embeddings would remain uninterrupted. Thus, with existing knowledge embeddings of such entities and relations, corresponding triples would satisfy: h⋆+r⋆≈t⋆. Hence, we also keep the knowledge embeddings of existing entities and relations unchanged so long as their contexts are unchanged.

(4)

What shall we do with an existing entity or relation having changed context?

Notice that its contextual subgraph embedding, a combination of context element

embeddings of its neighboring entities or relations, computed by the R-GCN,

will change to reflect this update. We next re-learn the knowledge embeddings of

existing entities and relations with changed contexts, and in that process we

adjust both their knowledge embeddings and joint embeddings, with the aim that

the joint embeddings, after such update, still approximately satisfy the

translations in the modified graph. In practice, we find that the modifications

happened in the joint embeddings are generally small due to local updates in a

KG, which explains why our method is effective in approximately preserving the

translations in the modified KG.

(5) In addition, we also learn knowledge embeddings and contextual element embeddings of emerging entities and relations.

In this way, our algorithm greatly reduces the number of triples which need to be retrained while preserving h⋆+r⋆≈t⋆ on the whole KG. This enables online learning with higher efficiency.

References

[2] X. L. Dong. 2018. Challenges and Innovations in Building a Product Knowledge Graph. In KDD.

[3] J. Lehmann, R. Isele, M. Jakob, A. Jentzsch, D. Kontokostas, P. N. Mendes, S. Hellmann, M. Morsey, P. van Kleef, S. Auer, and C. Bizer. 2015. DBpedia - A Large-Scale, Multilingual Knowledge Base Extracted from Wikipedia. Semantic Web 6, 2 (2015), 167–195.

[4] J. Hoffart, F. M Suchanek, K. Berberich, and G. Weikum. 2013. YAGO2: A Spatially and Temporally Enhanced Knowledge Base from Wikipedia. Artificial Intelligence 194 (2013), 28–61.

[5] K. D. Bollacker, C. Evans, P. Paritosh, T. Sturge, and J. Taylor. 2008. Freebase: A Collaboratively Created Graph Database for Structuring Human Knowledge. In SIGMOD.

[6] T. M. Mitchell, W. W. Cohen, E. R. Hruschka Jr., P. P. Talukdar, B. Yang, J. Betteridge, A. Carlson, B. D. Mishra, M. Gardner, B. Kisiel, J. Krishnamurthy, N. Lao, K. Mazaitis, T. Mohamed, N. Nakashole, E. A. Platanios, A. Ritter, M. Samadi, B. Settles, R. C. Wang, D. Wijaya, A. Gupta, X. Chen, A. Saparov, M. Greaves, and J.Welling. 2018. Never-Ending Learning. Commun. ACM 61, 5 (2018), 103–115.

[7] A. Gyrard, M. Gaur, K. Thirunarayan, A. P. Sheth, and S. Shekarpour. 2018. Personalized Health Knowledge Graph. In CKGSemStats@ISWC.

[8] Q.Wang, Z.Mao, B.Wang, and L. Guo. 2017. Knowledge Graph Embedding: A Survey of Approaches and Applications. IEEE Transactions on Knowledge and Data Engineering 29, 12 (2017), 2724–2743.

[9] M. Ali, M. Berrendorf, C. T. Hoyt, L. Vermue, M. Galkin, S. Sharifzadeh, A. Fischer, V. Tresp, and J. Lehmann. 2020. Bringing Light Into the Dark: A Large-scale Evaluation of Knowledge Graph Embedding Models Under a Unified Framework. CoRR abs/2006.13365 (2020).

[10] Y. Tay, A. T. Luu, and S. C. Hui. 2017. Non-Parametric Estimation of Multiple Embeddings for Link Prediction on Dynamic Knowledge Graphs. In AAAI.

[11] B. Yang, W.-t. Yih, X. He, J. Gao, and L. Deng. 2015. Embedding Entities and Relations for Learning and Inference in Knowledge Bases. In ICLR.

[12] M. Wang, L. Qiu, and X. Wang. 2021. A Survey on Knowledge Graph Embeddings for Link Prediction. Symmetry 13, 3 (2021), 485.

[13] Y. Zhao, A. Zhang, R. Xie, K. Liu, and X. Wang. 2020. Connecting Embeddings for Knowledge Graph Entity Typing. In ACL.

[14] Y. Wang, A. Khan, T. Wu, J. Jin, and H. Yan. 2020. Semantic Guided and Response Times Bounded Top-k Similarity Search over Knowledge Graphs. In ICDE.

[15] X. Chen, M. Chen, C. Fan, A. Uppunda, Y. Sun, and C. Zaniolo. 2020. Multilingual Knowledge Graph Completion via Ensemble Knowledge Transfer. In EMNLP (Findings).

[16] F. Zhang, N. Jing Yuan, D. Lian, X. Xie, and W.-Y. Ma. 2016. Collaborative Knowledge Base Embedding for Recommender Systems. In KDD.

[17] J. Shin, S. Wu, F. Wang, C. D. Sa, C. Zhang, and C. R´e. 2015. Incremental Knowledge Base Construction Using DeepDive. PVLDB 8, 11 (2015), 1310– 1321.

[18] X. Lin, H. Li, H. Xin, Z. Li, and L. Chen. 2020. KBPearl: A Knowledge Base Population System Supported by Joint Entity and Relation Linking. PVLDB 13, 7 (2020), 1035–1049.

[19] S. Hellmann, C. Stadler, J. Lehmann, and S. Auer. 2009. DBpedia Live Extraction. In OTM Conferences.

[20] Source for IMDB dataset. https://www.imdb.com/interfaces/.

[21] Use Deep Search to Explore the COVID-19 Corpus. https://www.research.ibm.com/covid19/deep-search/.

[22] M. Nickel, V. Tresp, and H.-P. Kriegel. 2011. A Three-Way Model for Collective Learning on Multi-Relational Data. In ICML.

[23] A. Bordes, N. Usunier, A. Garcia-Duran, J.Weston, and O. Yakhnenko. 2013. Translating Embeddings for Modeling Multi-Relational Data. In NIPS.

[24] Z. Wang, J. Zhang, J. Feng, and Z. Chen. 2014. Knowledge Graph Embedding by Translating on Hyperplanes. In AAAI.

[25] T. Trouillon, J. Welbl, S. Riedel, ´ E. Gaussier, and G. Bouchard. 2016. Complex Embeddings for Simple Link Prediction. In ICML.

[26] J. Feng, M. Huang, Y. Yang, and X. Zhu. 2016. GAKE: Graph Aware Knowledge Embedding. In COLING.

[27] T. Dettmers, P. Minervini, P. Stenetorp, and S. Riedel. 2018. Convolutional 2D Knowledge Graph Embeddings. In AAAI.

[28] M. Schlichtkrull, T. N. Kipf, P. Bloem, R. van den Berg, I. Titov, and M.Welling. 2018. Modeling Relational Data with Graph Convolutional Networks. In ESWC.

Comments

Post a Comment